Model Context Protocol for Dummies


The Model Context Protocol (MCP) is designed to solve a crucial problem for modern AI assistants: accessing external data. While powerful, AI models are limited by the knowledge they were trained on. They need a way to tap into real-time information from various sources like databases, code repositories, cloud storage, and other applications to provide truly useful and context-aware responses. MCP provides that bridge.

Think of it like this: an AI is a brilliant student, but it's stuck in a library with only a limited set of books. MCP is like giving that student a library card and internet access, allowing them to explore and learn from a vast universe of information beyond the initial set of books.

Here's a breakdown of MCP's key elements and their roles:

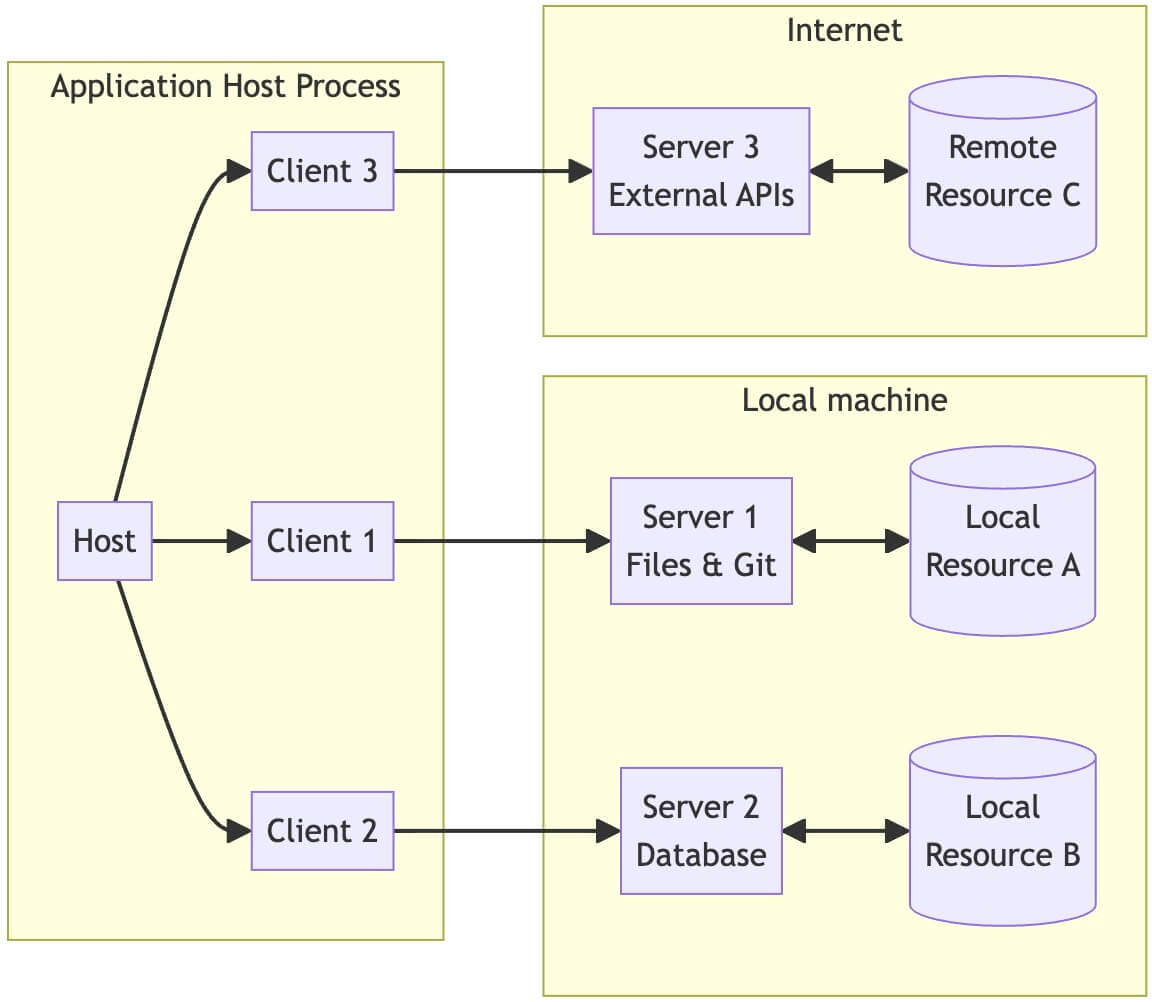

The Protocol Specification: This is the blueprint, the set of rules that defines how MCP clients (AI assistants) and MCP servers (gateways to data sources) communicate. It's like the language they both agree to speak: because every client and server follows the same rules, any compliant client can talk to any compliant server.
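To make "the language they both agree to speak" concrete, here is a minimal sketch of the message shape. MCP messages are JSON-RPC 2.0 objects; the helper names, the example URI, and the file contents below are assumptions made up for illustration, and the result payload is only loosely modeled on a resource read.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so that each reply can be matched to its request.
_ids = count(1)


def make_request(method, params):
    """Build a JSON-RPC 2.0 request object (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def make_result(request, result):
    """Build the success response that answers `request` (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Illustrative only: ask a server to read one resource, then fake its reply.
request = make_request("resources/read", {"uri": "file:///notes/todo.txt"})
response = make_result(
    request,
    {"contents": [{"uri": "file:///notes/todo.txt", "text": "buy milk"}]},
)

# The serialized form is what would travel over the transport.
wire = json.dumps(request)
```

The point of the shared envelope is that a client only needs to know the method name and parameters, not anything about how the server stores its data.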

MCP Servers: These act as gateways to specific data sources. An MCP server for Google Drive, for example, knows how to retrieve files and information from Google Drive in a way the AI assistant can understand. Each kind of data source typically gets its own MCP server, which translates between that source's specific format and the standardized MCP format. Servers handle authentication, data retrieval, and formatting.
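The three server duties named above (authentication, retrieval, formatting) can be sketched in a few lines. This is a toy under stated assumptions, not a real MCP server: `ToyServer`, `FAKE_DRIVE`, and the API-key check are hypothetical, the data source is an in-memory dictionary, and only `-32601` is a standard JSON-RPC error code (the other two codes are arbitrary picks from the implementation-defined range).

```python
# In-memory stand-in for a real data source such as a drive or a database.
FAKE_DRIVE = {"report.txt": "Q3 revenue grew 12%."}


class ToyServer:
    """Hypothetical gateway: authenticates, retrieves, and formats."""

    def __init__(self, files, api_key):
        self._files = files
        self._api_key = api_key

    def handle(self, request, api_key):
        # 1. Authentication (simplified to a shared key passed by the caller).
        if api_key != self._api_key:
            return self._error(request, -32001, "unauthorized")
        # Only one method is supported in this sketch.
        if request.get("method") != "resources/read":
            return self._error(request, -32601, "method not found")
        # 2. Retrieval from the underlying data source.
        uri = request["params"]["uri"]
        if uri not in self._files:
            return self._error(request, -32002, "resource not found")
        # 3. Formatting into the shared response envelope.
        result = {"contents": [{"uri": uri, "text": self._files[uri]}]}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    @staticmethod
    def _error(request, code, message):
        return {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": code, "message": message},
        }


server = ToyServer(FAKE_DRIVE, api_key="demo-key")
read_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "resources/read",
    "params": {"uri": "report.txt"},
}
ok_reply = server.handle(read_request, "demo-key")
denied_reply = server.handle(read_request, "wrong-key")
```

Swapping `FAKE_DRIVE` for a real backend would change only the retrieval step; the envelope the client sees stays the same, which is the translator role the paragraph describes.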

MCP Clients: These are the AI assistants or tools that want to access external data. They send requests to MCP servers according to the protocol specification, asking for specific information. They receive responses from the servers and use that information to enhance their understanding and responses.

SDKs (Software Development Kits): These are tools that make it easier for developers to build MCP clients and servers. They provide pre-built functions and code libraries, reducing the amount of work needed to integrate with MCP. Imagine them as pre-fabricated building blocks, allowing developers to focus on the specific functionality of their applications rather than the low-level details of the protocol.
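As a rough illustration of the "pre-fabricated building blocks" idea, the sketch below shows the kind of boilerplate an SDK can hide: registering plain functions and routing calls to them by name. `ToolRegistry` and `word_count` are hypothetical and are not the API of any actual MCP SDK.

```python
class ToolRegistry:
    """Hypothetical helper that stands in for what an SDK might provide."""

    def __init__(self):
        self._tools = {}

    def tool(self, func):
        """Decorator: register a plain function under its own name."""
        self._tools[func.__name__] = func
        return func

    def list_tools(self):
        """Describe every registered tool, sorted by name."""
        return [
            {"name": name, "description": (func.__doc__ or "").strip()}
            for name, func in sorted(self._tools.items())
        ]

    def call(self, name, arguments):
        """Look up a tool by name and call it with keyword arguments."""
        return self._tools[name](**arguments)


registry = ToolRegistry()


@registry.tool
def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())


count_result = registry.call("word_count", {"text": "context makes models useful"})
```

With a helper like this, the developer writes only `word_count`; discovery and dispatch come for free, which is the division of labor the paragraph describes.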

Open-Source Repositories: These are publicly available collections of code for MCP servers and other related tools. Sharing code this way fosters collaboration and lets developers build upon each other's work, accelerating the development of the MCP ecosystem. It also means developers don't have to start from scratch every time they want to connect to a new data source; someone might have already built an MCP server for it.

In short, here is how it works:

- An AI assistant (MCP client) needs information.

- It sends a request to the appropriate MCP server (e.g., the Google Drive MCP server).

- The MCP server authenticates the request, retrieves the data from Google Drive, and formats it according to the MCP specification.

- The MCP server sends the formatted data back to the AI assistant.

- The AI assistant uses this data to provide a more informed and contextually relevant response.
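The steps above can be sketched end to end in a single process. Everything here is an assumption made for illustration: the `drive://` URI, the `secret-token` check, and the function names are hypothetical, the "transport" is just a function call passing JSON strings, and the final step only builds a prompt string rather than calling a model.

```python
import json

# Stand-in data source; a real server would query Google Drive or similar.
DOCS = {"drive://budget.txt": "Travel budget: 4000 EUR"}


def server_handle(raw_request, token):
    """Server side: authenticate, retrieve, format, and send back."""
    request = json.loads(raw_request)
    uri = request["params"]["uri"]
    if token != "secret-token":
        body = {"error": {"code": -32001, "message": "unauthorized"}}
    elif uri not in DOCS:
        body = {"error": {"code": -32002, "message": "resource not found"}}
    else:
        body = {"result": {"contents": [{"uri": uri, "text": DOCS[uri]}]}}
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], **body})


def client_fetch(uri, token):
    """Client side: send a request and unpack the formatted reply."""
    raw_request = json.dumps(
        {"jsonrpc": "2.0", "id": 1, "method": "resources/read", "params": {"uri": uri}}
    )
    reply = json.loads(server_handle(raw_request, token))
    if "error" in reply:
        raise RuntimeError(reply["error"]["message"])
    return reply["result"]["contents"][0]["text"]


def build_prompt(question, uri, token="secret-token"):
    """Final step: fold the retrieved text into the context given to the model."""
    context = client_fetch(uri, token)
    return f"Context: {context}\nQuestion: {question}"


prompt = build_prompt("What is the travel budget?", "drive://budget.txt")
```

In a real deployment the client and server would be separate processes connected by a transport, but the division of responsibilities is the same as in this sketch.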

The goal of MCP is to create a universal standard for connecting AI to any data source. With a shared standard, a new data source needs one MCP server rather than a custom integration for every assistant, which makes connected AI systems easier to build and to scale. As more developers adopt MCP and contribute to the open-source ecosystem, AI assistants will be able to draw on a growing network of information sources.

Some useful links for further reading:

- Model Context Protocol Website

- MCP Specification

- MCP GitHub Repository

- Maintained MCP Servers

- Anthropic's introductory blog post on MCP

Lazhar Ichir

Lazhar Ichir is the CEO and founder of Modelmetry, an LLM guardrails and observability platform that helps developers build secure and reliable modern LLM-powered applications.